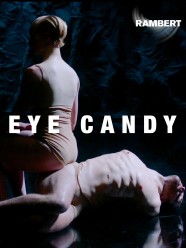

Eye Candy

Our body is how we interact with the physical world, and it is also where our most instinctual parts live. It's where we feel pleasure and love, judge and destroy, bleed and smile. Since David and Venus, we've put the human body on the highest pedestal, creating and sustaining an unattainable standard of beauty. Through this warped view of beauty, our natural shape has been sexualized, demonized, and sometimes shamed into a taboo. Why does a naked body make you feel bad? Does it have anything to do with politics? Aren't we more than what we seem?